Welcome back! This will be the last post of this series, making the final refactor to our application and tying up the loose ends to complete the CI/CD setup.

Refactor

Let’s start by tackling the issue raised in the last post:

> but we are starting to have some duplicate code between the endpoints for the rest api and the endpoint for some pages.

Since we added new endpoints for each page, we duplicated some of the code that gets the information from the database. We can resolve this by decoupling that logic from the endpoints and creating a new reusable layer.

The final goal is to have two separate layers, with a clear separation of roles and responsibilities between them: one for the controllers and one for the business or domain logic.

So, let’s start with the controller layer by creating a new directory inside src with a mod file and two controller files, one for the views (or pages) and the other one for the api.

```sh
mkdir controllers && cd controllers
touch {mod.rs,dino.rs,views.rs}
```

Now we can move the endpoint functions to each file and declare the modules in our mod file.

```rust
// dino.rs
pub async fn create(mut req: Request<State>) -> tide::Result {
    todo!();
}

pub async fn list(req: tide::Request<State>) -> tide::Result {
    todo!();
}

pub async fn get(req: tide::Request<State>) -> tide::Result {
    todo!();
}

pub async fn update(mut req: tide::Request<State>) -> tide::Result {
    todo!();
}

pub async fn delete(req: tide::Request<State>) -> tide::Result {
    todo!();
}
```
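As a rough sketch of the module declarations mentioned above: in the project, controllers/mod.rs would most likely contain just the two lines `pub mod dino;` and `pub mod views;`. The version below inlines the module bodies so it compiles as a single file. The `describe` functions are hypothetical placeholders and are not part of the real code.

```rust
// Inline equivalent of `controllers/mod.rs`.
// In the project each module body lives in its own file (dino.rs, views.rs).
pub mod controllers {
    pub mod dino {
        /// Hypothetical placeholder for the api endpoints.
        pub fn describe() -> &'static str {
            "api controller"
        }
    }

    pub mod views {
        /// Hypothetical placeholder for the page endpoints.
        pub fn describe() -> &'static str {
            "views controller"
        }
    }
}
```

With the modules declared, main.rs can probably reach them with `mod controllers;` followed by `use controllers::{dino, views};`, although the exact imports depend on how the rest of the crate is organised.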

Great! The idea behind this refactor is to decouple the endpoint logic from the logic used to interact with the database. So, these functions will receive the request and call the other layer (let’s call it the handler layer) to perform the CRUD operations.

Now we need to create the new handler layer and expose the api to be used by the controllers. So again, inside our src directory:
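To make the split more concrete, here is a minimal sketch that uses only the standard library. `Store`, `list_dinos`, `api_list` and `view_index` are hypothetical names: `Store` stands in for the real database pool, and the string formatting stands in for the JSON and template rendering. The point is only that both controllers reuse the same handler function.

```rust
/// Minimal record; the real `Dino` has more fields.
#[derive(Debug, Clone, PartialEq)]
pub struct Dino {
    pub name: String,
    pub weight: i32,
}

/// Hypothetical stand-in for the database pool.
pub struct Store {
    pub dinos: Vec<Dino>,
}

// --- handler layer: the only code that touches the store ---

/// Returns a copy of every dino in the store.
pub fn list_dinos(store: &Store) -> Vec<Dino> {
    store.dinos.clone()
}

// --- controller layer: turns handler output into a response ---

/// API controller: serializes the rows as `name:weight` pairs.
pub fn api_list(store: &Store) -> String {
    list_dinos(store)
        .iter()
        .map(|d| format!("{}:{}", d.name, d.weight))
        .collect::<Vec<_>>()
        .join(",")
}

/// View controller: renders the same rows as an HTML list.
pub fn view_index(store: &Store) -> String {
    let items: String = list_dinos(store)
        .iter()
        .map(|d| format!("<li>{}</li>", d.name))
        .collect();
    format!("<ul>{}</ul>", items)
}
```

If the way we fetch dinos changes, only `list_dinos` needs to be touched and both controllers pick up the change.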

```sh
mkdir handlers && cd handlers
touch {mod.rs,dino.rs}
```

```rust
// handlers/dino.rs
pub async fn create(dino: Dino, db_pool: PgPool) -> tide::Result<Dino> {
    todo!();
}

pub async fn list(db_pool: PgPool) -> tide::Result<Vec<Dino>> {
    todo!();
}

pub async fn get(id: Uuid, db_pool: PgPool) -> tide::Result<Option<Dino>> {
    todo!();
}

pub async fn delete(id: Uuid, db_pool: PgPool) -> tide::Result<Option<()>> {
    todo!();
}

pub async fn update(id: Uuid, dino: Dino, db_pool: PgPool) -> tide::Result<Option<Dino>> {
    todo!();
}
```

Each function receives as arguments an instance of the database pool and whatever the operation needs (e.g. a uuid or a dino).

Now we can start filling in the todo!() calls and implement the actual logic. Here is an example of the create operation, but you can check the PR to see the full code.
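The signatures above return an `Option` for the operations that can miss a row. Here is a small sketch of how a controller could use that, written with the standard library only. `FakePool`, the numeric id and `get_status` are assumptions made for illustration: the real code uses `PgPool`, a `Uuid` and a tide response.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, PartialEq)]
pub struct Dino {
    pub name: String,
}

/// Hypothetical stand-in for `PgPool`, keyed by a numeric id instead of a Uuid.
pub struct FakePool {
    pub rows: HashMap<u32, Dino>,
}

/// Handler: gets everything it needs as arguments and returns
/// `Ok(None)` when the row does not exist.
pub fn get(id: u32, pool: &FakePool) -> Result<Option<Dino>, String> {
    Ok(pool.rows.get(&id).cloned())
}

/// Controller: decides the HTTP status from the handler result.
pub fn get_status(id: u32, pool: &FakePool) -> u16 {
    match get(id, pool) {
        Ok(Some(_)) => 200,
        Ok(None) => 404,
        Err(_) => 500,
    }
}
```

Keeping the "not found" case as a value, and not as an error, lets each controller decide how to present it (a 404 page, an empty JSON body, and so on).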

```rust
// controllers/dino.rs
use crate::handlers;

pub async fn create(mut req: Request<State>) -> tide::Result {
    let dino: Dino = req.body_json().await?;
    let db_pool = req.state().db_pool.clone();

    let row = handlers::dino::create(dino, db_pool).await?;

    let mut res = Response::new(201);
    res.set_body(Body::from_json(&row)?);
    Ok(res)
}
```

```rust
pub async fn create(dino: Dino, db_pool: PgPool) -> tide::Result<Dino> {
    let row: Dino = query_as!(
        Dino,
        r#"
        INSERT INTO dinos (id, name, weight, diet) VALUES
        ($1, $2, $3, $4) returning id, name, weight, diet
        "#,
        dino.id,
        dino.name,
        dino.weight,
        dino.diet
    )
    .fetch_one(&db_pool)
    .await
    .map_err(|e| Error::new(409, e))?;

    Ok(row)
}
```
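The `map_err` call at the end of the handler is what turns a database error into an HTTP 409. The same pattern can be sketched with the standard library alone. `StoreError`, `HttpError` and the `HashMap` store are hypothetical stand-ins for the sqlx error, the tide error and the database.

```rust
use std::collections::HashMap;

/// Hypothetical storage error, standing in for a database error.
#[derive(Debug, PartialEq)]
pub enum StoreError {
    DuplicateKey(u32),
}

/// Error carrying the HTTP status the controller should answer with.
#[derive(Debug, PartialEq)]
pub struct HttpError {
    pub status: u16,
    pub message: String,
}

/// Storage-level insert: refuses to overwrite an existing id.
fn insert(store: &mut HashMap<u32, String>, id: u32, name: &str) -> Result<(), StoreError> {
    if store.contains_key(&id) {
        return Err(StoreError::DuplicateKey(id));
    }
    store.insert(id, name.to_string());
    Ok(())
}

/// Handler: converts the storage error into a 409 with `map_err`,
/// so the caller can simply use `?`.
pub fn create(store: &mut HashMap<u32, String>, id: u32, name: &str) -> Result<u32, HttpError> {
    insert(store, id, name).map_err(|e| HttpError {
        status: 409,
        message: format!("{:?}", e),
    })?;
    Ok(id)
}
```

Note that the handler in the post maps every database error to 409, not only duplicate keys. A finer-grained mapping is possible, but it is outside the scope of this sketch.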

Great! After completing the implementation of the other functions we can move on and complete our view controller.

We have 3 views (index, new and edit):

```rust
// controllers/views.rs
pub async fn index(req: Request<State>) -> tide::Result {
    let tera = req.state().tera.clone();
    let db_pool = req.state().db_pool.clone();

    let rows = handlers::dino::list(db_pool).await?;

    tera.render_response(
        "index.html",
        &context! {
            "title" => String::from("Tide basic CRUD"),
            "dinos" => rows
        },
    )
}

pub async fn new(req: Request<State>) -> tide::Result {
    let tera = req.state().tera.clone();

    tera.render_response(
        "form.html",
        &context! {
            "title" => String::from("Create new dino")
        },
    )
}

pub async fn edit(req: Request<State>) -> tide::Result {
    let tera = req.state().tera.clone();
    let db_pool = req.state().db_pool.clone();
    let id: Uuid = Uuid::parse_str(req.param("id")?).unwrap();

    let row = handlers::dino::get(id, db_pool).await?;

    let res = match row {
        None => Response::new(404),
        Some(row) => {
            let mut r = Response::new(200);
            let b = tera.render_body(
                "form.html",
                &context! {
                    "title" => String::from("Edit dino"),
                    "dino" => row
                },
            )?;
            r.set_body(b);
            r
        }
    };

    Ok(res)
}
```

Awesome! Each one now calls the handler to retrieve the dino information. We are almost there: we now need to clean up our main file and tie the routes to the controllers. We can start by removing all the restEntity logic in main and just tie the routes to the controller functions in our server fn:

```rust
// views
app.at("/").get(views::index);
app.at("/dinos/new").get(views::new);
app.at("/dinos/:id/edit").get(views::edit);

// api
app.at("/dinos").get(dino::list).post(dino::create);

app.at("/dinos/:id")
    .get(dino::get)
    .put(dino::update)
    .delete(dino::delete);

app.at("/public")
    .serve_dir("./public/")
    .expect("Invalid static file directory");

app
```

Great! Now we can run the tests:

```sh
cargo test

running 10 tests
test tests::clear ... ok
test tests::delete_dino ... ok
test tests::create_dino_with_existing_key ... ok
test tests::delete_dino_non_existing_key ... ok
test tests::create_dino ... ok
test tests::get_dino ... ok
test tests::update_dino ... ok
test tests::get_dino_non_existing_key ... ok
test tests::list_dinos ... ok
test tests::updatet_dino_non_existing_key ... ok

test result: ok. 10 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out
```

Nice! We have almost finished the refactor; we still have some code to clean up and unused imports to remove.

CI/CD

Next, we need to finish the CI/CD pipeline. We are already running the tests in GH Actions, so that part is covered, but we want to set up the CD part to deploy our app on each commit to main.

Updating sqlx

First, let’s update sqlx to enable offline mode, which allows us to build our app without connecting to the database.

```toml
# Cargo.toml
sqlx = { version = "0.4.2", features = ["runtime-async-std-rustls", "offline", "macros", "chrono", "json", "postgres", "uuid"] }
```

We need to add the offline feature and replace runtime-async-std with one of the runtimes supported by the new version (in our case runtime-async-std-rustls).

Also, we need to make a couple of changes in our code to work with the new version:

- Pool::new is now replaced by Pool::connect

```rust
pub async fn make_db_pool(db_url: &str) -> PgPool {
    Pool::connect(db_url).await.unwrap()
}
```

- We need to use `as "id!"` in the query that inserts a Dino, to override the inferred nullable field type (Option)

```rust
// use as "id!" here to prevent sqlx from using the inferred (nullable) type
// since we know that id should not be null
// see : https://github.com/launchbadge/sqlx/blob/master/src/macros.rs#L482
pub async fn create(dino: Dino, db_pool: &PgPool) -> tide::Result<Dino> {
    let row: Dino = query_as!(
        Dino,
        r#"
        INSERT INTO dinos (id, name, weight, diet) VALUES
        ($1, $2, $3, $4) returning id as "id!", name, weight, diet
        "#,
        dino.id,
        dino.name,
        dino.weight,
        dino.diet
    )
    .fetch_one(db_pool)
    .await
    .map_err(|e| Error::new(409, e))?;

    Ok(row)
}
```

Great! Now we can install sqlx-cli and generate the file needed to build without a db connection.

```sh
cargo install sqlx-cli
cargo sqlx prepare
(...)
query data written to `sqlx-data.json` in the current directory; please check this into version control
```

Nice! We can now build our code without a db connection :)

Selecting a provider

To deploy our app we will use qovery. They offer containers as a service and their tagline is "The simplest way to deploy your full-stack apps". Also, they have a community plan, so let’s try it and see how it goes.

To deploy our app we need two files:

- Dockerfile : to build and run our application

- .qovery.yml : to describe the infra our project needs.

For the Dockerfile we will use a template from cargo chef that uses multi-stage builds.

You can check the complete files in the repo.

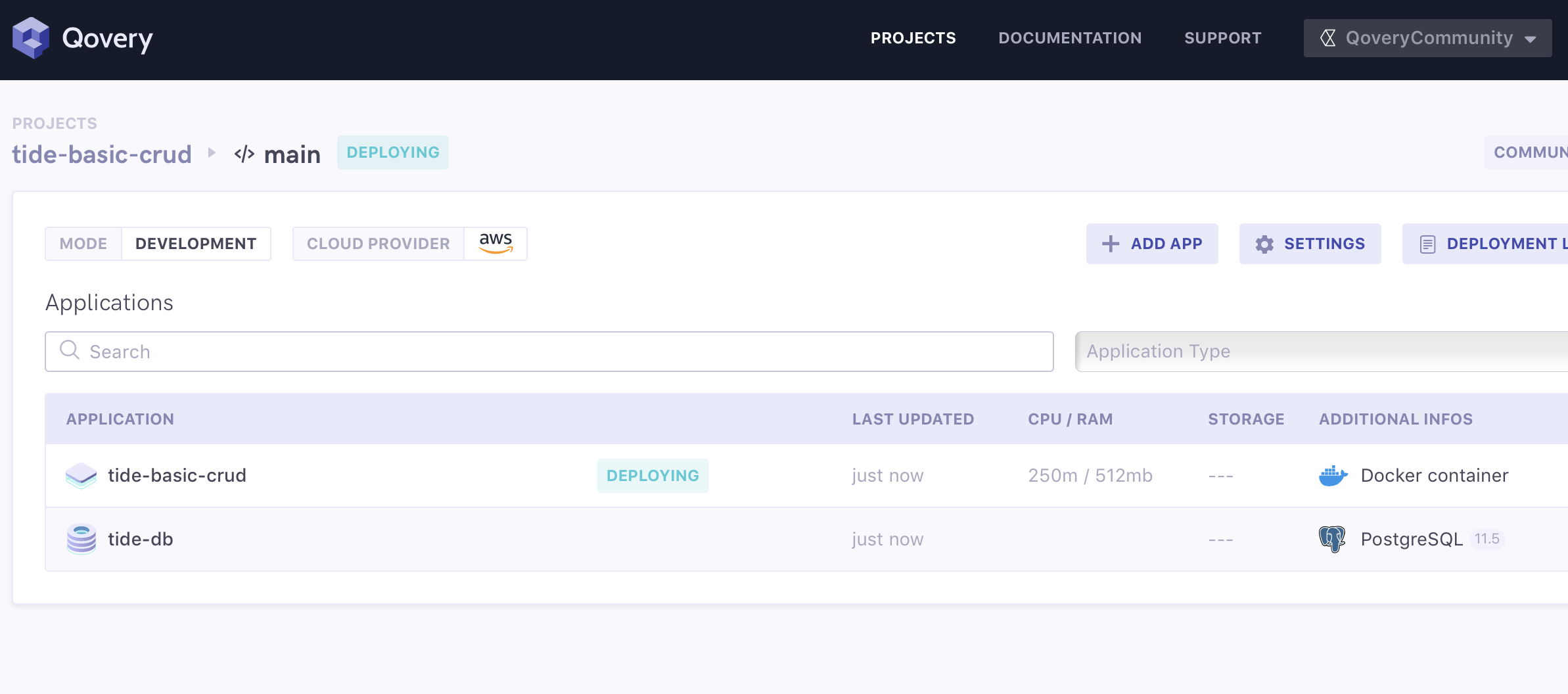

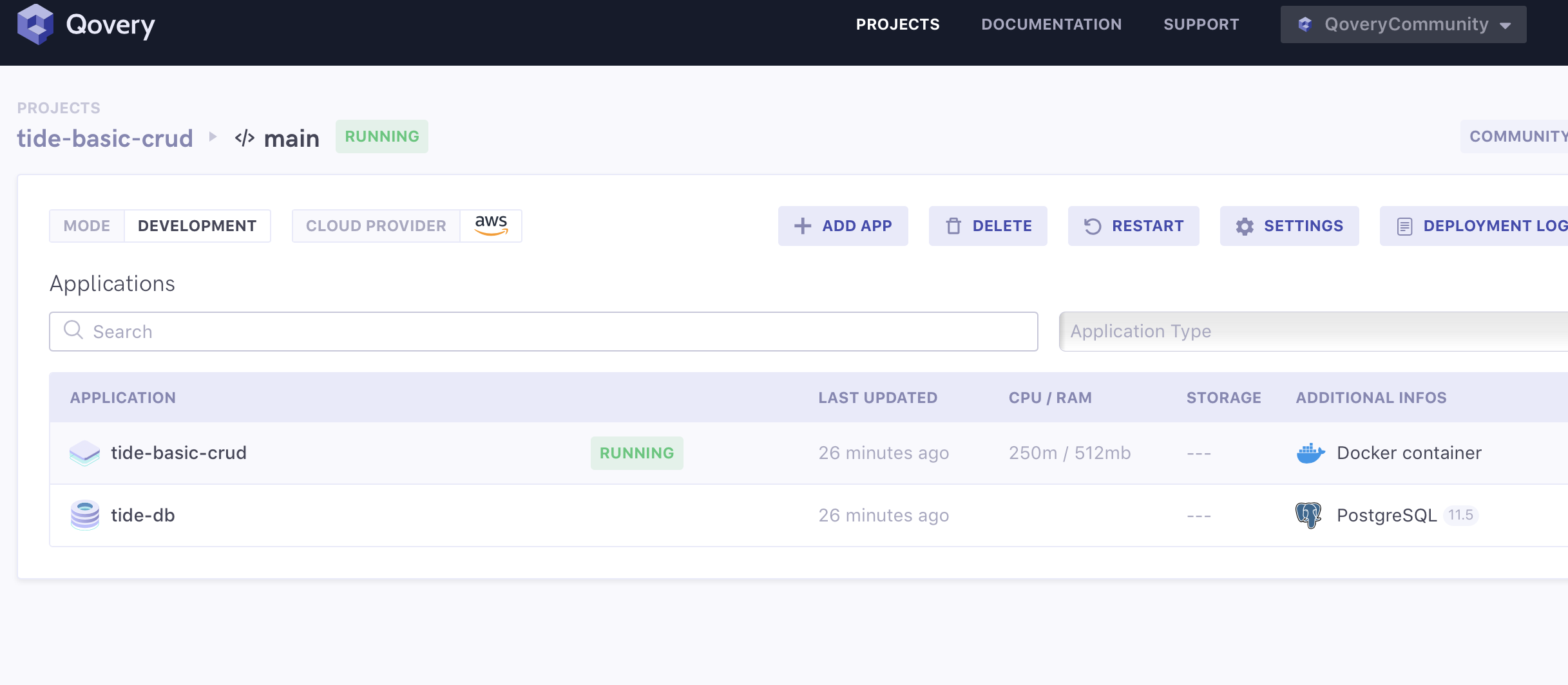

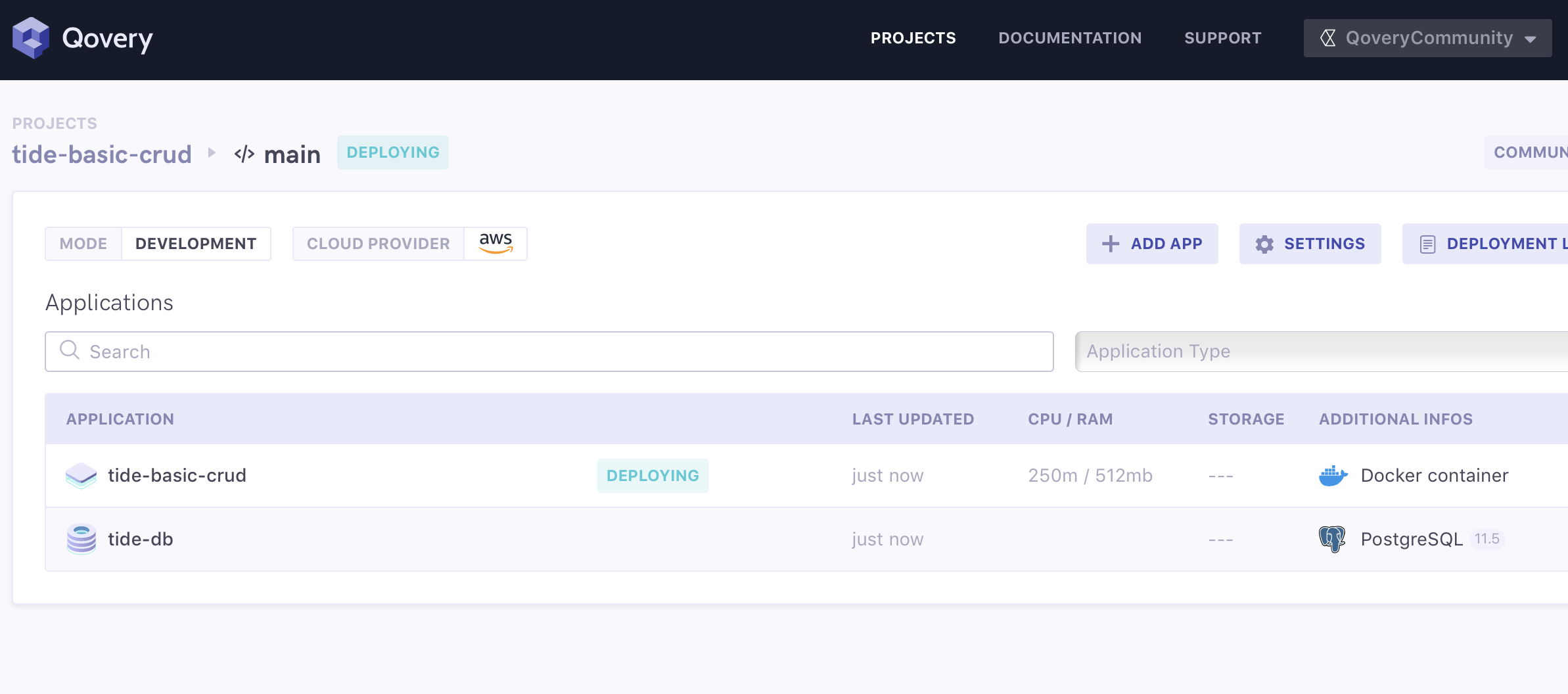

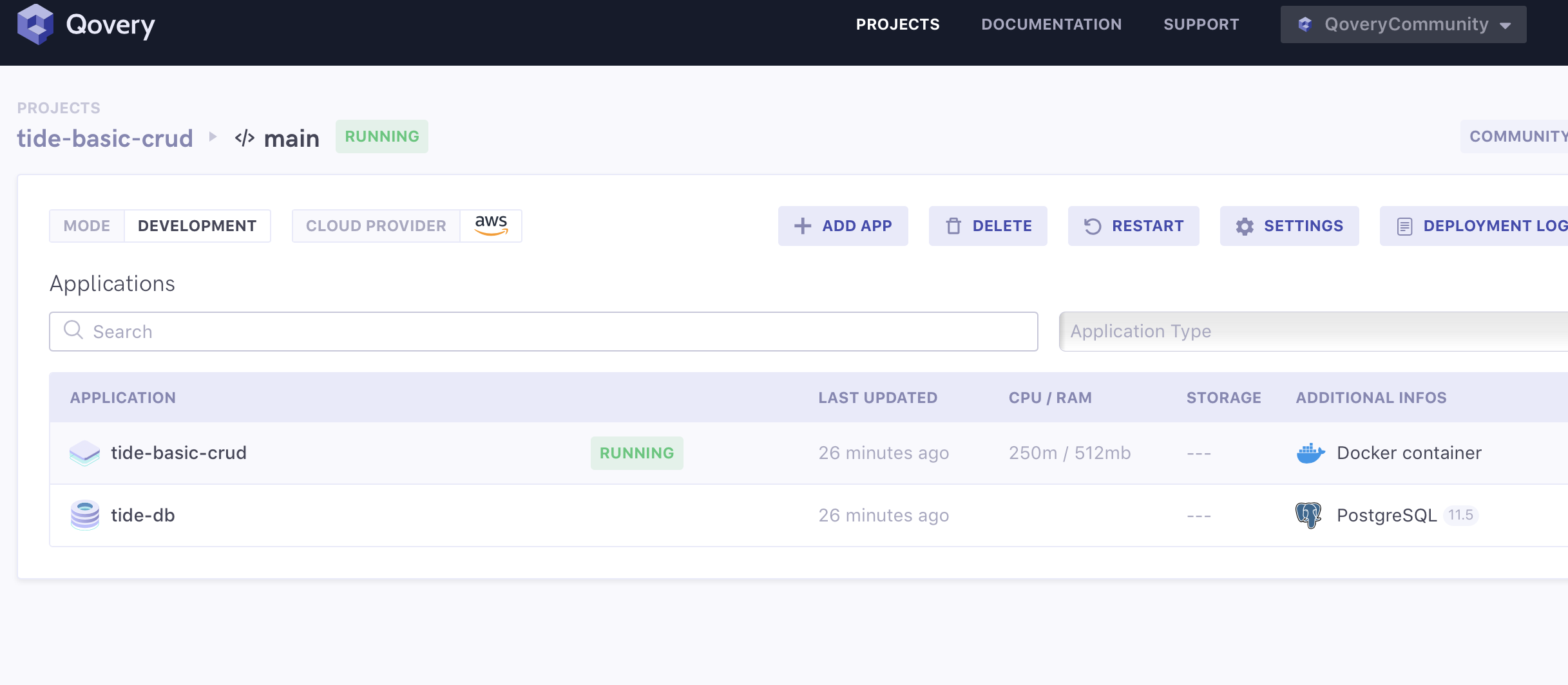

Now we can see the deployment in action in the qovery console:

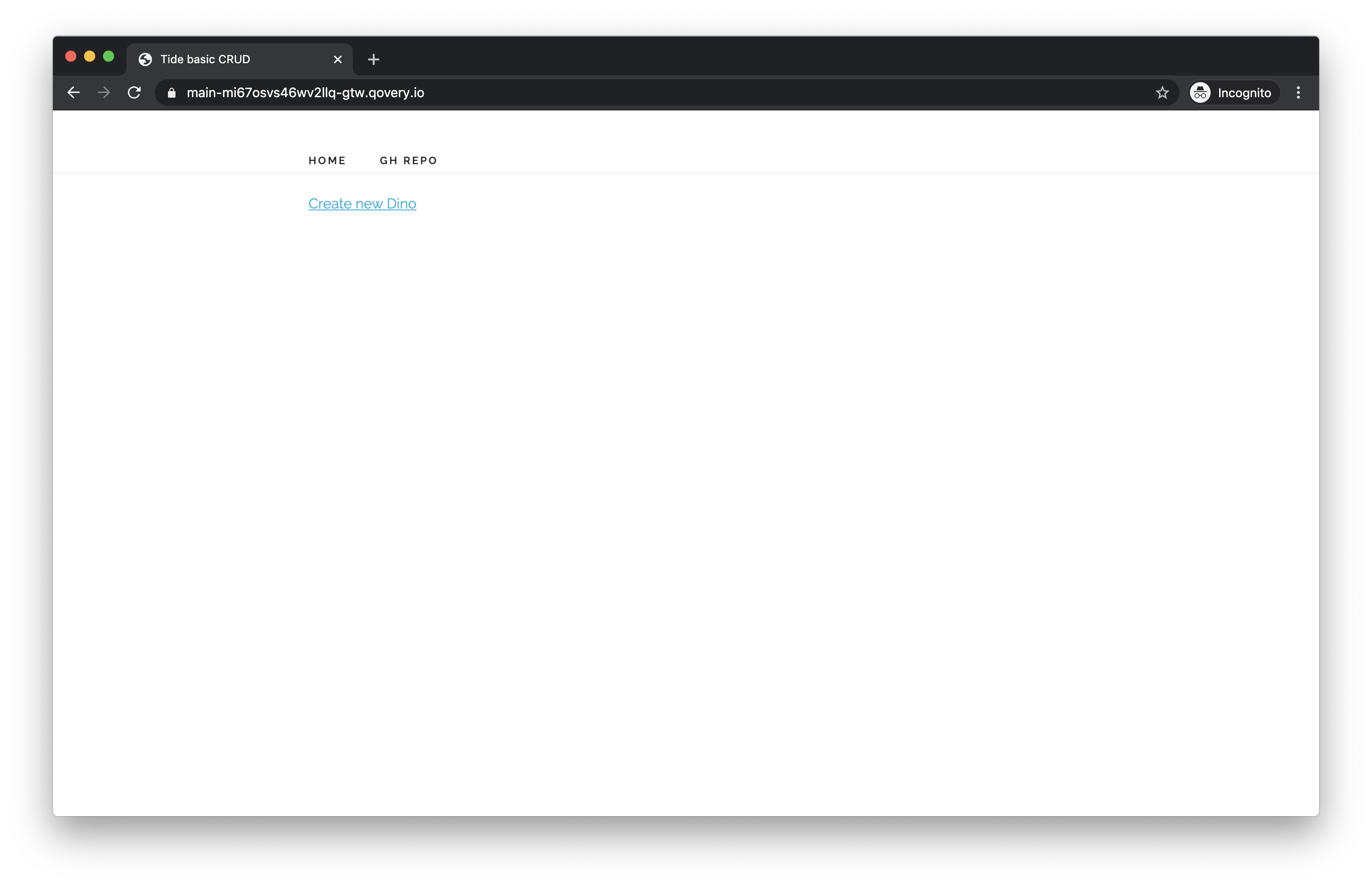

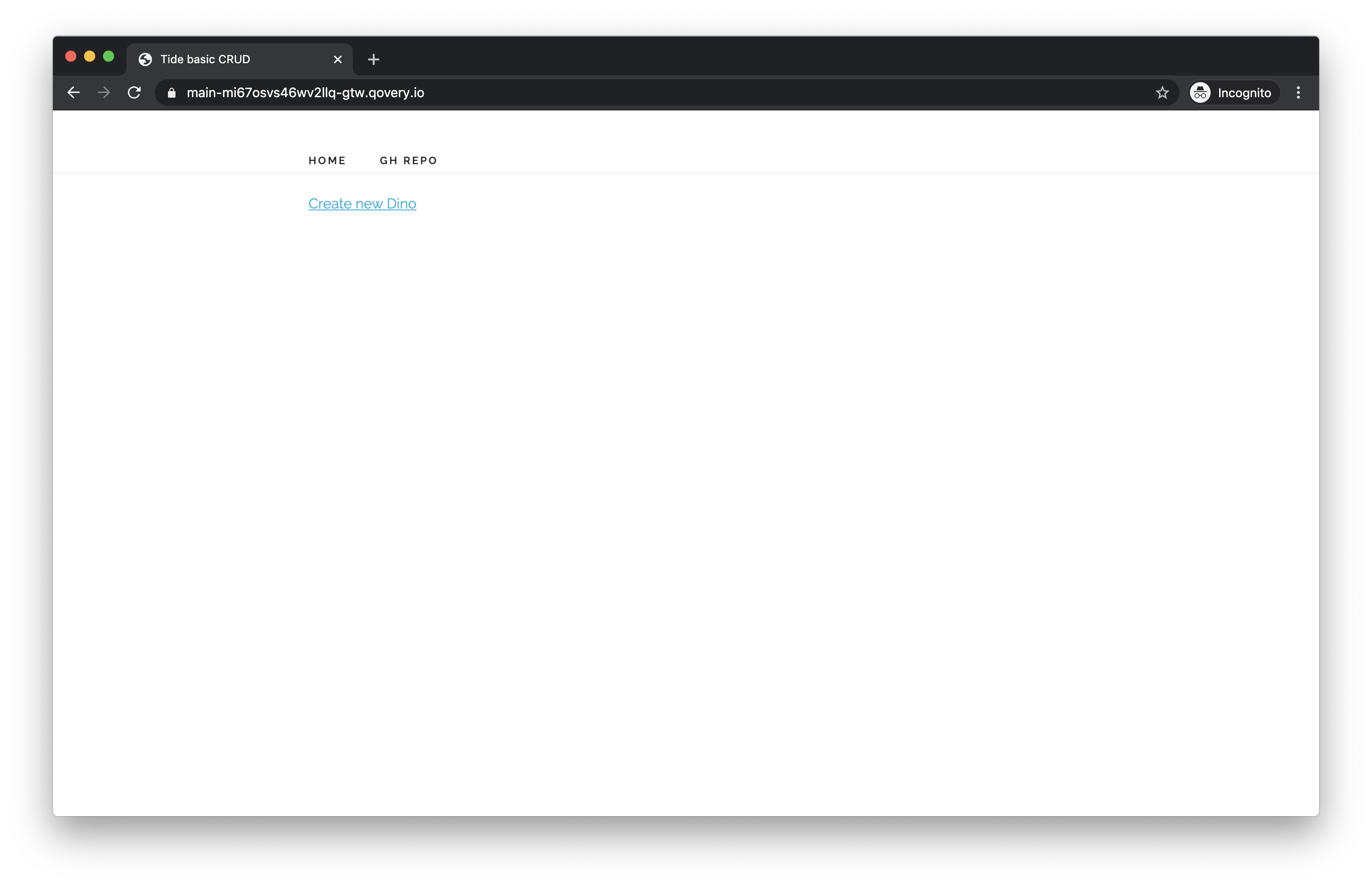

And each commit will trigger a new build of our app, which is now live :-)

That’s all for today and for this series. I think there is still room to improve, but we can leave that for the future since we now have a minimal working CRUD with both api and front-end, and the CI/CD in place :-)

In future posts I will be exploring web-sockets with tide.

As always, I write this as a learning journal; there may be more elegant or correct ways to do it, and any feedback is welcome.

Thanks!